Video Transcript

If you operate a call center and are manually auditing the performance of your phone agents, then you should just stop wasting your time and money doing it.

Many call centers manually audit agent performance by listening to a sample of agent calls. There are so many problems with that. First of all, I've seen many auditors cherry-pick the calls they audit (such as picking shorter calls to review), which is basically biasing the data. Plus, the things they're listening for in their audits are very often subjective, such as assessing if the agent is "actively listening" or "building a rapport with the caller" or "resolving the issue".

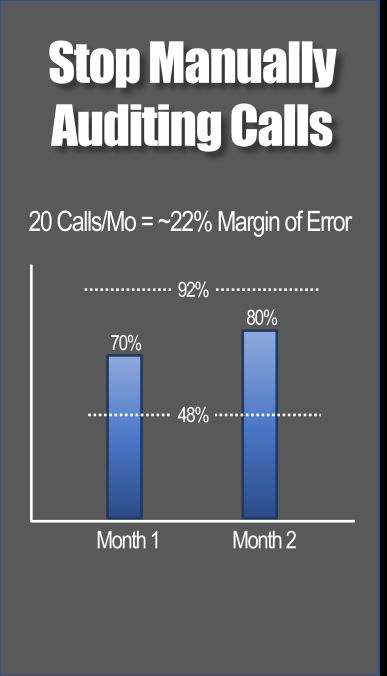

When you have qualitative info like that, all of the auditors need to be calibrated frequently to ensure they're following a clear and stringent set of standards for defining each of the performance issues they're listening for. All of that requires a lot of time and effort just to ensure the data being collected is unbiased and reliable. But even if they could do that perfectly, they still end up sampling only about 10 to 20 calls per month per agent. At best, if they audit as many as 20 calls per month per agent, that yields a margin of error of about plus or minus 22 percentage points.

What does that mean? It means if they score the agent as being 70% successful, then the true average success rate could be anywhere between 48% and 92%. That's such a wide margin of error that it's practically impossible to statistically prove whether the agent is performing better or worse month over month. Getting a margin of error small enough to reliably prove whether they're performing better or worse means auditing several hundred calls per month per agent, and that becomes cost-prohibitive.
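The transcript doesn't say how the 22% figure was calculated. It is consistent with the standard margin-of-error formula for a proportion, assuming a 95% confidence level (z = 1.96) and the worst-case success rate of 50%. The sketch below works through the arithmetic under those assumptions; the function names `margin_of_error` and `calls_needed` are illustrative only.

```python
import math

Z_95 = 1.96  # assumption: 95% confidence level, not stated in the transcript


def margin_of_error(n, p=0.5, z=Z_95):
    """Normal-approximation margin of error for a proportion.

    n: number of audited calls
    p: assumed true success rate (0.5 is the worst case, giving the widest margin)
    """
    return z * math.sqrt(p * (1 - p) / n)


def calls_needed(target_margin, p=0.5, z=Z_95):
    """Smallest sample size whose margin of error is at most target_margin."""
    return math.ceil(z ** 2 * p * (1 - p) / target_margin ** 2)


# 20 audited calls per month per agent
moe = margin_of_error(20)
print(f"Margin of error at 20 calls: {moe:.0%}")  # 22%

# An agent scored at 70% successful
low, high = 0.70 - moe, 0.70 + moe
print(f"Plausible true rate: {low:.0%} to {high:.0%}")  # 48% to 92%

# Calls needed per agent per month for a 5-point margin
print(f"Calls for a 5% margin: {calls_needed(0.05)}")  # 385
```

Under these assumptions, cutting the margin to about 5 percentage points takes roughly 385 audited calls per agent per month, which lines up with the "several hundred calls" mentioned above.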

So if you can't reliably prove if they're getting better or worse, then why bother measuring their performance like this at all? There are far better ways to more reliably measure agent performance using automation and speech analytics, and those measurements can be done automatically on nearly all agent calls. But if you're not going to invest the time, effort and money to develop better measurement tools like that, then at least save your money by not manually reviewing their performance.
